Roombas for Home Security

- Laura Tramontozzi

- Aug 5, 2022

Updated: Sep 20, 2024

Since iRobot products rely on cameras to generate maps of the home and avoid obstacles, we explored whether users would be interested in using their Roombas as autonomous mobile security devices.

Role & Team: Senior UX Designer, iRobot

Tools: FigJam, Figma

Skills: Early UX Exploration

Deliverables: Rough sketches to talk through possibilities with the team & users

The Challenge

How can we integrate Home Monitoring seamlessly into the current iRobot app for users who opt in to a Home Monitoring subscription?

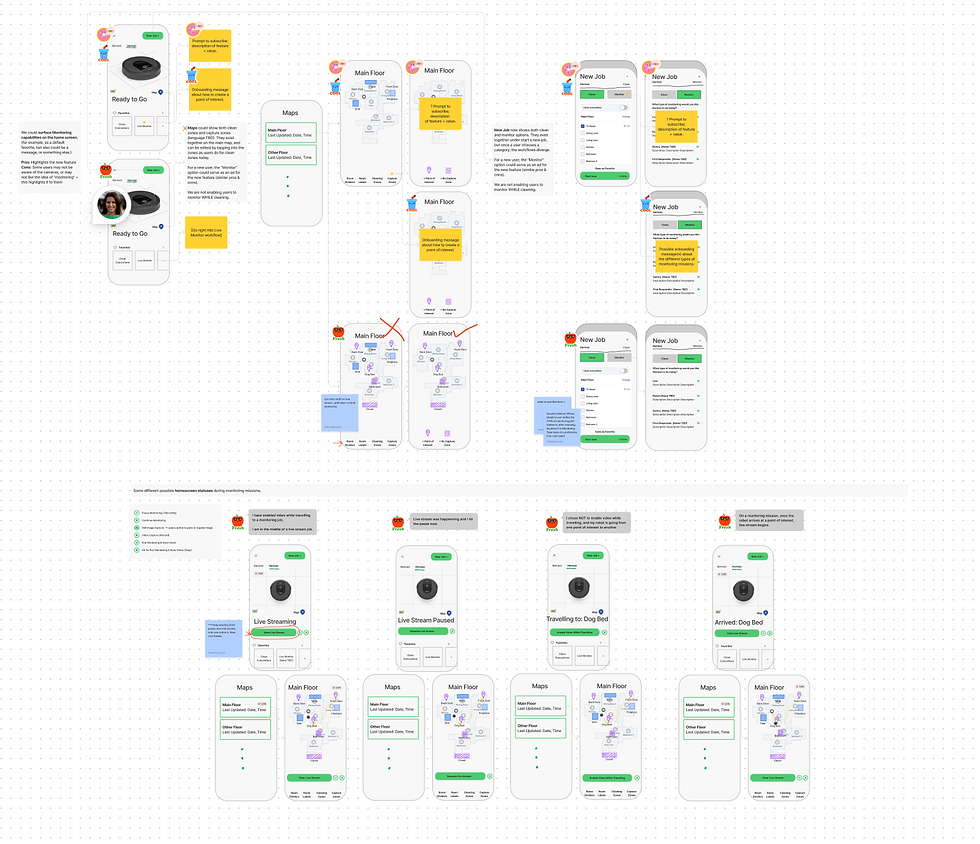

I drew up a bunch of sketches to explore how we might incorporate home security features within the current iRobot app architecture. I focused on 3 areas: creating points of interest, fine-tuning points of interest, and mission control.

Creating points of interest

Points of Interest were defined as areas of the home that users want to track and view with their Roombas. Users could create a point of interest by dropping a pin on an existing map of their home.

Fine tuning points of interest

Users could then send the robot to the point of interest and refine it based on what the robot captured at that location.

Some Detailed Questions about Points of Interest:

How do we define the view? What is in the screen capture for a particular point of interest?

How do we define the zoom? How far from the “Point” is the robot when it captures a defined point of interest?

What robot positions are possible to capture desired views?

What is necessary to define in order to save a point of interest, and what can be left as part of fine tuning?

When do users want to see a preview? Previews take time to create because they require the robot to navigate to the location.

Is there value to creating a point of interest WITHOUT fine tuning, or is it too broad as it is today?

Can we define a point of interest on the map without having the robot travel to the location and show a preview? If so, how?
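To make the view, zoom and robot-position questions above more concrete, here is a minimal sketch of how a point of interest might be modelled. Everything in it is an assumption for illustration: the type names, the fields, the use of metres and degrees, and the idea that "zoom" is a standoff distance are hypothetical and do not describe the actual iRobot app.

```typescript
// Hypothetical model: none of these names come from the iRobot app.
interface Point {
  x: number; // map coordinate, assumed to be in metres
  y: number;
}

interface PointOfInterest {
  name: string;
  pin: Point;             // where the user dropped the pin on the map
  viewHeadingDeg: number; // direction the camera faces; 0 = +x axis, counter-clockwise
  standoffM: number;      // the "zoom": how far from the pin the robot parks
}

interface RobotPose {
  x: number;
  y: number;
  headingDeg: number;
}

// Place the robot standoffM behind the pin along the viewing direction,
// so that it faces the pin when it captures the view.
function capturePose(poi: PointOfInterest): RobotPose {
  const rad = (poi.viewHeadingDeg * Math.PI) / 180;
  return {
    x: poi.pin.x - poi.standoffM * Math.cos(rad),
    y: poi.pin.y - poi.standoffM * Math.sin(rad),
    headingDeg: poi.viewHeadingDeg,
  };
}

// Example: a pin at the front door, viewed from one metre away.
const frontDoor: PointOfInterest = {
  name: "Front door",
  pin: { x: 2, y: 3 },
  viewHeadingDeg: 0,
  standoffM: 1,
};
const pose = capturePose(frontDoor);
```

In a model like this, only the pin is needed to save a point of interest. Heading and standoff could start from defaults and be left to fine-tuning, which is one possible answer to what must be defined up front.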

Initiating & controlling a Home Monitoring Mission

Users need a way to control the robot and send it to view a point of interest. We experimented with reusing as many components from cleaning controls as possible.

Some Nuances re: The Home Screen, Robot Control & Negotiating with Eng:

We already have user controls for the robot with regards to cleaning: Pause, End, Resume.

Engineering suggests simply mirroring the controls for cleaning and adding a button for View Live Stream. We suspect this may confuse users, since the same controls would function differently depending on the context.

Is this the best solution for home monitoring control, or is there a better set of controls we could surface for the user at different points in the mission?

How can we distinguish between actions for a cleaning mission and actions for a live stream?

Some concerns about adding to an already complicated mapping experience

It is impossible to put everything on a single map. We should explore the purpose of map visualizations at different points in the user journey and in different parts of the app before smushing everything into one confusing experience (which we later did, separately from this project).

Consider: the goal of the smart map (cleaning) isn't the same as the goal of the monitoring map (monitoring).

Outstanding Question: How can we most simply alter the UX of the main map to enable 2 views (clean, monitoring)?

NEXT STEPS

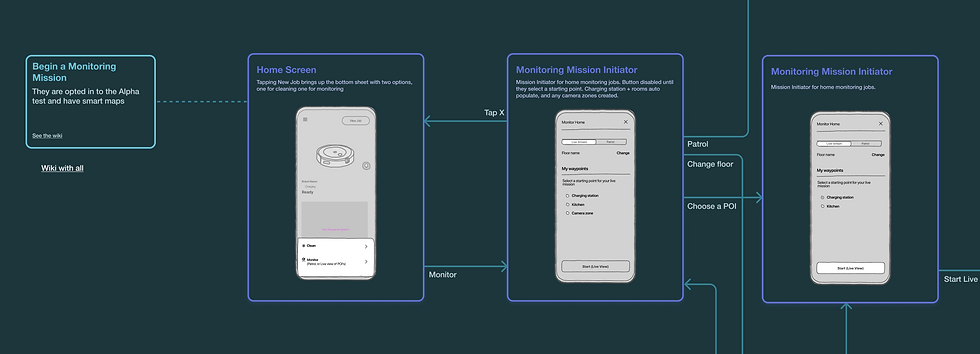

We built a home monitoring experience, independent of the cleaning experience, to test with Alpha users.

This enabled us to:

Keep the primary cleaning interface simple and straightforward

Quell privacy concerns of our customers by limiting Home Monitoring control to manual driving

Here are some slightly higher-fidelity sketches that were ultimately turned into an alpha experience.

To start, the easiest way for a user to create a home monitoring zone was to duplicate the UI we use for clean and keep-out zones. This isn't ideal, but it is simple, and good enough to use while testing other, riskier functionality.

Starting a new home monitoring mission was built as its own separate experience:

Robot control is a manual drive interface. This proved to be quite challenging due to the unusual two-wheeled steering style of the robot, plus the inevitable network latency.
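For readers unfamiliar with two-wheeled steering, the sketch below shows a common way to turn a joystick position into left and right wheel commands (often called differential-drive mixing). It is a generic illustration, not the control code used in the alpha. The function name, the input ranges and the normalization step are all assumptions.

```typescript
// Hypothetical helper: maps a joystick position to left/right wheel commands.
interface WheelCommand {
  left: number;  // -1 (full reverse) .. 1 (full forward)
  right: number; // -1 (full reverse) .. 1 (full forward)
}

function clamp(value: number, lo: number, hi: number): number {
  return Math.min(hi, Math.max(lo, value));
}

// forward: -1 (reverse) .. 1 (ahead); turn: -1 (left) .. 1 (right).
function mixDifferentialDrive(forward: number, turn: number): WheelCommand {
  const f = clamp(forward, -1, 1);
  const t = clamp(turn, -1, 1);

  // Turning right speeds up the left wheel and slows the right wheel.
  const left = f + t;
  const right = f - t;

  // Scale both wheels together so neither exceeds full speed,
  // which preserves the ratio between them.
  const scale = Math.max(1, Math.abs(left), Math.abs(right));
  return { left: left / scale, right: right / scale };
}

const straight = mixDifferentialDrive(1, 0); // both wheels at full speed
const spin = mixDifferentialDrive(0, 1);     // wheels oppose: spin in place
const arc = mixDifferentialDrive(1, 1);      // right wheel stops: pivot right
```

With no forward input, a full turn drives the wheels in opposite directions and spins the robot in place, which is part of why this steering style feels different from a car. Network latency compounds the problem, because each command reaches the robot after the user has already seen an out-of-date video frame.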

WRAP UP

We refined and released these ideas first in Alpha, then created and tested 5 variations with thousands of Beta users, eventually arriving at an intuitive interface that was easy to control. Ultimately, this functionality, while available, has not been prioritized as a key feature in production, as we focus most of our efforts on intelligent cleaning solutions.